What is Multiscale Modelling?

In 1959 Richard Feynman gave a talk called “There’s plenty of room at the bottom.” If you’ve never heard of him then read Genius by James Gleick as soon as humanly possible. This talk dealt with, it is no surprise to learn, the technology of the very small. I’m not sure that the original talk can be found on the internet, and that’s a problem with the internet, not Feynman, as I’m sure there’s not much that Kim Kardashian has done that isn’t covered in rather too much detail. A 1984 re-run of the talk – “Tiny Machines” – is on YouTube and worth watching. As are many other Feynman videos. But I’m wandering away from my point.

So in 1959 and 1984 there was plenty of room at the bottom. And in 2015 it’s length scales that seem to dominate the outlook for simulation. So let’s start at the bottom. Molecular modelling is fairly alien to me – when I see the systems that do it I always think of school chemistry textbooks – but if there’s one thing I’m aware of, it’s that the arrangement of those coloured spheres on sticks pretty much defines what we need to enter into the boxes in the property sections of finite element codes. So one way of determining properties might be to model the molecular structure in something like BIOVIA. (And I’m aware that this might start to read like an advertorial, but that really isn’t my intention.) Long-winded, difficult, expensive and probably not as relevant as an Instron in nearly every case. But in situations where molecular modelling is a routine activity, it isn’t done simply to predict properties. It’s done to engineer them. And that means we can influence product design and function at a smaller length scale than we did previously, and add yet more scope for creative problem solving.

This has, I am reliably informed, already been done to some degree with elastomers for car tyres. Early days, I’m sure, but something of an indication of where we are headed. In “Plenty of Room” Feynman says “At the atomic level, we have new kinds of forces and new kinds of possibilities, new kinds of effects. The problems of manufacture and reproduction of materials will be quite different. I am, as I said, inspired by the biological phenomena in which chemical forces are used in a repetitious fashion to produce all kinds of weird effects (one of which is the author).” So whilst the practice of using molecular modelling as an underpinning technology for larger scale simulation is somewhat new, the idea, the dream, is older than me. There’s a reason his biography is called “Genius”.

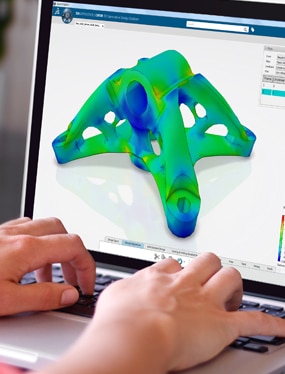

Currently my days, and above all my working thought processes, are dominated by multiscale modelling. Not at a molecular level, but, once again, with composites. The properties of composite structures result from the combination of their constituents: material and glue, as I once described it to a much younger version of the son who couldn’t be made to get up this morning. So, again, you can use testing to describe the material to a system that works at a larger length scale, or you can model it to drive a fuller, more extensive representation and understanding of what goes on in extremis; and, if you are playing the game properly, use the approach to engineer better, nearer-optimal, more effective materials. This isn’t generally done; in fact, it isn’t done anywhere near enough. But the technology exists, can be purchased in a number of forms, and seems to be largely ignored by engineering companies in the UK. It’s something that will be driving the technical agenda at SSA in the future, if it isn’t already.
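As a rough illustration of the “material and glue” idea, the very simplest way of getting a composite’s stiffness from its constituents is the rule of mixtures. This is a hedged sketch, not SSA’s method or any commercial package: it assumes a unidirectional ply, computes the Voigt (iso-strain) estimate for stiffness along the fibres and the Reuss (iso-stress) estimate across them, and uses hypothetical input values roughly in the range of carbon fibre in epoxy.

```python
def _check_fraction(v_fibre: float) -> None:
    """Reject fibre volume fractions outside the physical range [0, 1]."""
    if not 0.0 <= v_fibre <= 1.0:
        raise ValueError(f"fibre volume fraction must be in [0, 1], got {v_fibre}")


def voigt_modulus(e_fibre: float, e_matrix: float, v_fibre: float) -> float:
    """Iso-strain (Voigt) rule of mixtures: stiffness along the fibres.

    Fibre and matrix stretch together, so their stiffnesses add in
    proportion to volume fraction. This is an upper bound.
    """
    _check_fraction(v_fibre)
    return v_fibre * e_fibre + (1.0 - v_fibre) * e_matrix


def reuss_modulus(e_fibre: float, e_matrix: float, v_fibre: float) -> float:
    """Iso-stress (Reuss) rule of mixtures: stiffness across the fibres.

    Fibre and matrix carry the same stress, so their compliances add.
    This is a lower bound and usually underestimates transverse stiffness.
    """
    _check_fraction(v_fibre)
    return 1.0 / (v_fibre / e_fibre + (1.0 - v_fibre) / e_matrix)


if __name__ == "__main__":
    # Hypothetical illustrative values in GPa (roughly carbon fibre in epoxy).
    e_f, e_m, v_f = 230.0, 3.5, 0.6
    print(f"E1 (along fibres)  ~ {voigt_modulus(e_f, e_m, v_f):.1f} GPa")
    print(f"E2 (across fibres) ~ {reuss_modulus(e_f, e_m, v_f):.1f} GPa")
```

With those made-up inputs the ply comes out at around 139 GPa along the fibres and under 9 GPa across them, which is the whole point of composites in two lines. The multiscale tools mentioned above go much further, modelling the microstructure explicitly to capture what happens in extremis, which closed-form bounds like these cannot do.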

And so to the other end of the length scale. I mentioned the Airbus models at the SCC in my last blog. They never fail to impress, and I’m sure if they were shown off more frequently, so would the models from other aircraft manufacturers and automotive OEMs. As an aside, it’s a shame in some respects that we rarely get to see these. Anyway, models have got ever larger, and even to display the results, let alone calculate them, we have to employ parallel and massively parallel computing systems. So, to round off this blog post, here is a paragraph from an article by Danny Hillis (Google him if you don’t know)…

“One day when I was having lunch with Richard Feynman, I mentioned to him that I was planning to start a company to build a parallel computer with a million processors. His reaction was unequivocal, ‘That is positively the dopiest idea I ever heard.’ For Richard a crazy idea was an opportunity to either prove it wrong or prove it right. Either way, he was interested. By the end of lunch he had agreed to spend the summer working at the company.”

So if there was plenty of room at the bottom in 1959, it isn’t exactly overcrowded in 2015. Multiscale modelling approaches are the next big thing to hit simulation, and the people and organisations that embrace them will be the ones to move prediction and engineering forward in the next few years.